
Chinese e-commerce giant Alibaba’s “Qwen Team” has done it again.

Mere days after releasing the lengthily named Qwen3-235B-A22B-2507 for free under an open source license, a model that is now the top-performing non-reasoning large language model (LLM) in the world (full stop, even compared to proprietary AI models from well-funded U.S. labs such as Google and OpenAI), this group of AI researchers has come out with yet another blockbuster model.

That is Qwen3-Coder-480B-A35B-Instruct, a new open-source LLM focused on assisting with software development. It is designed to handle complex, multi-step coding workflows and can create full-fledged, functional applications in seconds or minutes.

The model is positioned to compete with proprietary offerings like Claude Sonnet-4 in agentic coding tasks and sets new benchmark scores among open models.

It is available on Hugging Face, GitHub, Qwen Chat, via Alibaba’s Qwen API, and a growing list of third-party vibe coding and AI tool platforms.

Open source licensing means low cost and high optionality for enterprises

But unlike Claude and other proprietary models, Qwen3-Coder, which we’ll call it for short, is available now under an open source Apache 2.0 license, meaning any enterprise can download, modify, deploy and use it in commercial applications for employees or end customers without paying Alibaba or anyone else a dime.

It’s also so highly performant on third-party benchmarks, and in anecdotal usage among AI power users for “vibe coding” (coding using natural language, without formal development processes and steps), that at least one LLM researcher, Sebastian Raschka, wrote on X: “This might be the best coding model yet. General-purpose is cool, but if you want the best at coding, specialization wins. No free lunch.”

Developers and enterprises interested in downloading it can find the model on the AI model-sharing repository Hugging Face.

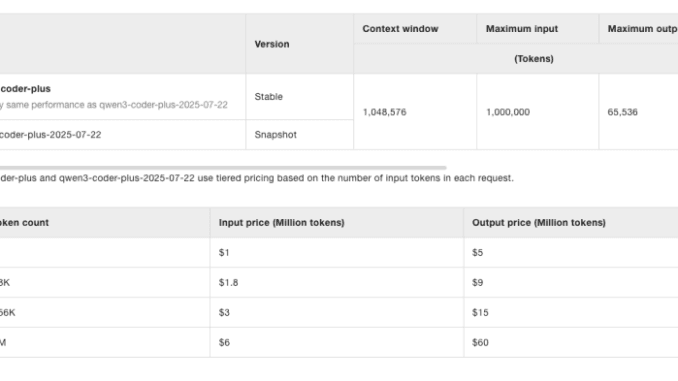

Enterprises that don’t wish to, or lack the capacity to, host the model on their own or through third-party cloud inference providers can also use it directly through the Alibaba Cloud Qwen API. Pricing is tiered by request length: $1/$5 per million tokens (MTok) of input/output for requests of up to 32,000 tokens, then $1.8/$9 for up to 128,000, $3/$15 for up to 256,000 and $6/$60 for the full million.
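To make the tiers concrete, here is a small Python sketch that estimates the cost of a single request. It assumes the tier is chosen by the request’s input length and that both input and output tokens are billed at that tier’s rates; the function name `request_cost` is hypothetical, and Alibaba’s actual billing rules may differ.

```python
# Tiered Qwen3-Coder API prices as quoted in the article, in USD per million tokens.
# Assumption: the tier is picked by the request's input length, and that tier's
# rates apply to both the input and output tokens of the request.
PRICE_TIERS = [
    # (max input tokens, input $/MTok, output $/MTok)
    (32_000, 1.0, 5.0),
    (128_000, 1.8, 9.0),
    (256_000, 3.0, 15.0),
    (1_000_000, 6.0, 60.0),
]


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request under the assumed tiering rule."""
    for max_input, in_rate, out_rate in PRICE_TIERS:
        if input_tokens <= max_input:
            return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
    raise ValueError("input exceeds the 1M-token context window")


if __name__ == "__main__":
    # A 100K-token prompt with a 10K-token answer lands in the 128K tier.
    print(f"${request_cost(100_000, 10_000):.2f}")
```

Under that assumption, a 100,000-token prompt with a 10,000-token answer costs about $0.27, and the same answer to a 20,000-token prompt costs a fraction of that because it stays in the cheapest tier.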

Model architecture and capabilities

According to the documentation released by Qwen Team online, Qwen3-Coder is a Mixture-of-Experts (MoE) model with 480 billion total parameters, 35 billion of which are active for each token, and 8 active experts out of 160.
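A Mixture-of-Experts layer sends each token through only a handful of experts chosen by a learned router, which is why only about 35 billion of the 480 billion parameters (roughly 7%) do work on any given token. The sketch below shows generic top-k softmax gating with the article’s numbers (8 of 160 experts). The toy scalar experts and the helper names are hypothetical, and Qwen3-Coder’s real router, expert networks and load-balancing details are not modeled.

```python
import math
import random

NUM_EXPERTS = 160  # experts per MoE layer, per the article
TOP_K = 8          # experts activated for each token, per the article


def route_token(router_logits: list[float], k: int = TOP_K) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their gate weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]  # subtract max for stability
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x: float, router_logits: list[float], experts: list) -> float:
    """Weighted sum of only the selected experts' outputs; the other 152 never run."""
    return sum(w * experts[i](x) for i, w in route_token(router_logits))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy experts: expert c simply multiplies its scalar input by c.
    experts = [lambda x, c=c: c * x for c in range(NUM_EXPERTS)]
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    chosen = route_token(logits)
    print([i for i, _ in chosen], round(moe_layer(1.0, logits, experts), 3))
```

Taking a softmax over only the selected logits gives the same weights as a full softmax followed by renormalizing the top 8, a common way such gates are written.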

It supports 256K-token context lengths natively, with extrapolation up to 1 million tokens using YaRN (Yet another RoPE extensioN method), a technique that extends a language model’s context length beyond its original training limit by modifying the Rotary Positional Embeddings (RoPE) used during attention computation. This capacity enables the model to understand and manipulate entire repositories or lengthy documents in a single pass.
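The core of YaRN is a per-dimension rescaling of RoPE frequencies. Dimensions that rotate many times within the original context are left alone, dimensions with wavelengths longer than that context are divided by the scale factor, dimensions in between are blended, and a small temperature correction is applied to attention. This Python sketch follows the formulation in the YaRN paper (ramp bounds alpha=1, beta=32). The head dimension, RoPE base and the 4x scale from 262,144 to 1,048,576 tokens are illustrative assumptions rather than values confirmed by the article, and production implementations such as Hugging Face’s differ in details.

```python
import math


def yarn_inv_freq(head_dim: int, base: float, scale: float, orig_ctx: int,
                  alpha: float = 1.0, beta: float = 32.0) -> list[float]:
    """RoPE inverse frequencies after YaRN's "NTK-by-parts" interpolation."""
    freqs = []
    for i in range(head_dim // 2):
        theta = base ** (-2 * i / head_dim)  # original RoPE frequency for this pair
        wavelength = 2 * math.pi / theta
        r = orig_ctx / wavelength            # full rotations within the trained context
        # Ramp: 0 -> fully interpolate (divide by scale), 1 -> leave frequency untouched.
        gamma = min(1.0, max(0.0, (r - alpha) / (beta - alpha)))
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_attention_scale(scale: float) -> float:
    """Multiplier applied to both q and k (attention logits scale by its square)."""
    return 0.1 * math.log(scale) + 1.0 if scale > 1 else 1.0


if __name__ == "__main__":
    # Illustrative values only: 128-dim heads, RoPE base 1e6, 262,144 -> 1,048,576 tokens.
    freqs = yarn_inv_freq(head_dim=128, base=1e6, scale=4.0, orig_ctx=262_144)
    print(freqs[0], freqs[-1], round(yarn_attention_scale(4.0), 4))
```

With these values the highest-frequency dimension keeps its original frequency, while the slowest one is divided by 4, so positions up to four times farther away map onto angles the model saw during training.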

Designed as a causal language model, it features 62 layers, 96 attention heads for queries, and 8 for key-value pairs. It is optimized for token-efficient, instruction-following tasks and omits support for <think> blocks by default, streamlining its outputs.
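The 96/8 head split is grouped-query attention: each group of 12 query heads shares one key/value head, which shrinks the key-value cache the model has to hold for long contexts. The arithmetic below assumes a head dimension of 128 and a 16-bit cache, neither of which the article states, so treat the absolute numbers as illustrative.

```python
NUM_LAYERS = 62       # per the article
NUM_Q_HEADS = 96      # per the article
NUM_KV_HEADS = 8      # per the article
HEAD_DIM = 128        # assumption: not stated in the article
BYTES_PER_VALUE = 2   # assumption: bf16/fp16 cache entries


def kv_head_for(q_head: int) -> int:
    """Grouped-query attention: consecutive query heads share one K/V head."""
    group_size = NUM_Q_HEADS // NUM_KV_HEADS  # 12 query heads per KV head
    return q_head // group_size


def kv_cache_bytes(num_tokens: int, kv_heads: int = NUM_KV_HEADS) -> int:
    """Keys + values (factor 2) for every layer, KV head, and cached token."""
    return 2 * NUM_LAYERS * kv_heads * HEAD_DIM * BYTES_PER_VALUE * num_tokens


if __name__ == "__main__":
    ctx = 262_144  # the native 256K window
    gqa = kv_cache_bytes(ctx)
    mha = kv_cache_bytes(ctx, kv_heads=NUM_Q_HEADS)  # if every query head had its own K/V
    print(f"GQA: {gqa / 2**30:.0f} GiB, full MHA: {mha / 2**30:.0f} GiB")
```

Under those assumptions, caching a full 256K-token context takes about 62 GiB per sequence with 8 KV heads, versus about 744 GiB if all 96 query heads kept their own keys and values.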

High performance

Qwen3-Coder has achieved leading performance among open models on several agentic evaluation suites:

SWE-bench Verified: 67.0% (standard), 69.6% (500-turn), compared with:

GPT-4.1: 54.6%

Gemini 2.5 Pro Preview: 49.0%

Claude Sonnet-4: 70.4%

The model also scores competitively across tasks such as agentic browser use, multi-language programming, and tool use. Charts published with the release show progressive improvement across training iterations in categories like code generation, SQL programming, code editing, and instruction following.

Alongside the model, Qwen has open-sourced Qwen Code, a command-line (CLI) tool forked from Google’s Gemini CLI. It supports function calling and structured prompting, making it easier to integrate Qwen3-Coder into coding workflows. Qwen Code runs in Node.js environments and can be installed via npm or from source.

Qwen3-Coder also integrates with developer platforms such as:

Claude Code (via DashScope proxy or router customization)

Cline (as an OpenAI-compatible backend)

Ollama, LM Studio, MLX-LM, llama.cpp, and KTransformers

Developers can run Qwen3-Coder locally or connect via OpenAI-compatible APIs using endpoints hosted on Alibaba Cloud.
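Because the endpoints are OpenAI-compatible, a request is a plain JSON POST to `/chat/completions`. The standard-library sketch below builds such a request without sending it. The base URL, the `qwen3-coder-plus` model name and the `DASHSCOPE_API_KEY` variable reflect our understanding of Alibaba Cloud’s compatible mode and are assumptions to check against its current documentation. The same request shape works against a local OpenAI-compatible server (Ollama, for example, listens on `http://localhost:11434/v1`) with that server’s own model name.

```python
import json
import os
import urllib.request

# Assumptions: endpoint and model name for Alibaba Cloud's OpenAI-compatible mode.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-coder-plus"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style POST /chat/completions request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Write a Python function that reverses a string.",
                             os.environ.get("DASHSCOPE_API_KEY", "sk-placeholder"))
    # Sending would be urllib.request.urlopen(req); here we only inspect the request.
    print(req.get_method(), req.full_url)
```

In practice most developers would use the official `openai` Python package with `base_url` pointed at the same endpoint; the raw request above just makes the wire format visible.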

Post-training techniques: code RL and long-horizon planning

In addition to pretraining on 7.5 trillion tokens (70% code), Qwen3-Coder benefits from advanced post-training techniques:

Code RL (Reinforcement Learning): Emphasizes high-quality, execution-driven learning on diverse, verifiable code tasks

Long-Horizon Agent RL: Trains the model to plan, use tools, and adapt over multi-turn interactions

This phase simulates real-world software engineering challenges. To enable it, Qwen built a system on Alibaba Cloud that runs 20,000 environments in parallel, offering the scale necessary for evaluating and training models on complex workflows like those found in SWE-bench.
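The execution-driven reward behind Code RL can be illustrated with a minimal function that scores a candidate solution by the fraction of unit tests it passes. This is a hypothetical sketch: the article does not describe Qwen’s actual reward design, the name `execution_reward` is ours, and a real training system would run untrusted code in a proper sandbox rather than a bare subprocess.

```python
import subprocess
import sys


def execution_reward(candidate_src: str, tests: list[str], timeout_s: float = 5.0) -> float:
    """Fraction of test snippets that pass when run against the candidate code.

    Each test runs in a fresh Python subprocess, so a crash or infinite loop in
    one test cannot affect the others. This is isolation, not a security sandbox.
    """
    passed = 0
    for test in tests:
        program = candidate_src + "\n" + test
        try:
            result = subprocess.run([sys.executable, "-c", program],
                                    capture_output=True, timeout=timeout_s)
        except subprocess.TimeoutExpired:
            continue  # a hung solution earns nothing for this test
        if result.returncode == 0:  # failed asserts exit non-zero
            passed += 1
    return passed / len(tests) if tests else 0.0


if __name__ == "__main__":
    tests = ["assert add(2, 3) == 5", "assert add(-1, 1) == 0"]
    print(execution_reward("def add(a, b):\n    return a + b", tests))       # 1.0
    print(execution_reward("def add(a, b):\n    return abs(a) + b", tests))  # 0.5
```

The second candidate mishandles negative inputs, so it earns half credit. A graded, verifiable signal like this is what lets reinforcement learning reward code that actually runs correctly rather than code that merely looks plausible.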

Enterprise implications: AI for engineering and DevOps workflows

For enterprises, Qwen3-Coder offers an open, highly capable alternative to closed-source proprietary models. With strong results in coding execution and long-context reasoning, it is especially relevant for:

Codebase-level understanding: Ideal for AI systems that must comprehend large repositories, technical documentation, or architectural patterns

Automated pull request workflows: Its ability to plan and adapt across turns makes it suitable for auto-generating or reviewing pull requests

Tool integration and orchestration: Through its native tool-calling APIs and function interface, the model can be embedded in internal tooling and CI/CD systems. This makes it especially viable for agentic workflows and products, i.e., those where the user triggers one or more tasks that they want the AI model to carry out autonomously, checking in only when finished or when questions arise.

Data residency and cost control: As an open model, enterprises can deploy Qwen3-Coder on their own infrastructure—whether cloud-native or on-prem—avoiding vendor lock-in and managing compute usage more directly

Support for long contexts and modular deployment options across various dev environments makes Qwen3-Coder a candidate for production-grade AI pipelines in both large tech companies and smaller engineering teams.

Developer access and best practices

To use Qwen3-Coder optimally, Qwen recommends:

Sampling settings: temperature=0.7, top_p=0.8, top_k=20, repetition_penalty=1.05

Output length: Up to 65,536 tokens

Transformers version: 4.51.0 or later (older versions do not recognize the qwen3_moe architecture and may throw errors)
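To see what the recommended sampling settings do, here is a simplified Python sketch of one decoding step that applies them in roughly the order Hugging Face’s `generate` applies its processors: repetition penalty, temperature, top-k, then top-p. It illustrates the standard techniques and is not Qwen’s or any library’s code; the function name `sample_next_token` is hypothetical.

```python
import math
import random


def sample_next_token(logits: list[float], generated: list[int], rng: random.Random,
                      temperature: float = 0.7, top_p: float = 0.8, top_k: int = 20,
                      repetition_penalty: float = 1.05) -> int:
    """Pick the next token id using Qwen's recommended defaults."""
    scores = list(logits)
    # 1. Repetition penalty: make already-generated tokens less likely.
    for tok in set(generated):
        s = scores[tok]
        scores[tok] = s / repetition_penalty if s > 0 else s * repetition_penalty
    # 2. Temperature below 1 sharpens the distribution.
    scores = [s / temperature for s in scores]
    # 3. Top-k: keep only the k highest-scoring candidates.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 4. Softmax over the survivors (subtract the max for numerical stability).
    peak = scores[ranked[0]]
    weights = [math.exp(scores[i] - peak) for i in ranked]
    total = sum(weights)
    probs = [w / total for w in weights]
    # 5. Top-p (nucleus): keep the smallest prefix whose probability mass reaches top_p.
    kept, mass = [], 0.0
    for i, p in zip(ranked, probs):
        kept.append((i, p))
        mass += p
        if mass >= top_p:
            break
    # 6. Sample from the nucleus; choices() renormalizes the weights itself.
    ids, ps = zip(*kept)
    return rng.choices(ids, weights=ps, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)
    vocab_logits = [2.0, 1.5, 0.3, -1.0, 0.9]
    print(sample_next_token(vocab_logits, generated=[0], rng=rng))
```

The settings trade off against each other: temperature 0.7 and top-k 20 keep output focused, top-p 0.8 trims the long tail, and the mild 1.05 penalty discourages loops without banning the repeated identifiers that code naturally contains.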

Qwen’s API and SDK examples use OpenAI-compatible Python clients.

Developers can define custom tools and let Qwen3-Coder dynamically invoke them during conversation or code generation tasks.
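Custom tools are typically described to an OpenAI-compatible endpoint as JSON Schema function definitions, and the client executes whatever calls the model returns. The sketch below uses a hypothetical `search_code` tool over an in-memory repository. `TOOLS` is what you would send in the request’s `tools` field, and `run_tool_calls` turns the model’s `tool_calls` into `role: "tool"` replies for the next turn. The assistant message in the example is hand-written, not real model output.

```python
import json

# Hypothetical in-memory "repository" for the illustrative tool to search.
REPO = {
    "app/main.py": "from app.db import connect\nconnect()\n",
    "app/db.py": "def connect():\n    return 'connected'\n",
}


def search_code(pattern: str) -> list[str]:
    """Hypothetical tool: return the paths of files whose source contains `pattern`."""
    return sorted(path for path, src in REPO.items() if pattern in src)


# Tool definitions in the OpenAI function-calling format (sent as the request's "tools").
TOOLS = [{
    "type": "function",
    "function": {
        "name": "search_code",
        "description": "Find files in the repository whose source contains a substring.",
        "parameters": {
            "type": "object",
            "properties": {"pattern": {"type": "string"}},
            "required": ["pattern"],
        },
    },
}]
REGISTRY = {"search_code": search_code}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each tool call in an assistant message and build role="tool" replies."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return replies


if __name__ == "__main__":
    fake_reply = {  # shaped like an OpenAI-compatible response message; hand-written here
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "search_code", "arguments": json.dumps({"pattern": "connect"})},
        }],
    }
    print(run_tool_calls(fake_reply))
```

An agent loop simply repeats this: send the conversation plus `TOOLS`, run any returned calls, append the replies, and stop when the model answers without requesting a tool.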

Warm early reception from AI power users

Initial responses to Qwen3-Coder-480B-A35B-Instruct have been notably positive among AI researchers, engineers, and developers who have tested the model in real-world coding workflows.

In addition to Raschka’s lofty praise above, Wolfram Ravenwolf, an AI engineer and evaluator at EllamindAI, shared his experience integrating the model with Claude Code on X, stating, “This is surely the best one currently.”

After testing several integration proxies, Ravenwolf said he ultimately built his own using LiteLLM to ensure optimal performance, demonstrating the model’s appeal to hands-on practitioners focused on toolchain customization.

Educator and AI tinkerer Kevin Nelson also weighed in on X after using the model for simulation tasks.

“Qwen 3 Coder is on another level,” he posted, noting that the model not only executed on provided scaffolds but even embedded a message within the output of the simulation — an unexpected but welcome sign of the model’s awareness of task context.

Even Twitter co-founder and Square (now called “Block”) founder Jack Dorsey praised the model in a post on X, writing: “Goose + qwen3-coder = wow,” a reference to Goose, the open source AI agent framework from his company Block, which VentureBeat covered back in January 2025.

These responses suggest Qwen3-Coder is resonating with a technically savvy user base seeking performance, adaptability, and deeper integration with existing development stacks.

Looking ahead: more sizes, more use cases

While this release focuses on the most powerful variant, Qwen3-Coder-480B-A35B-Instruct, the Qwen team indicates that additional model sizes are in development.

These will aim to offer similar capabilities with lower deployment costs, broadening accessibility.

Future work also includes exploring self-improvement, as the team investigates whether agentic models can iteratively refine their own performance through real-world use.
